Getty Images

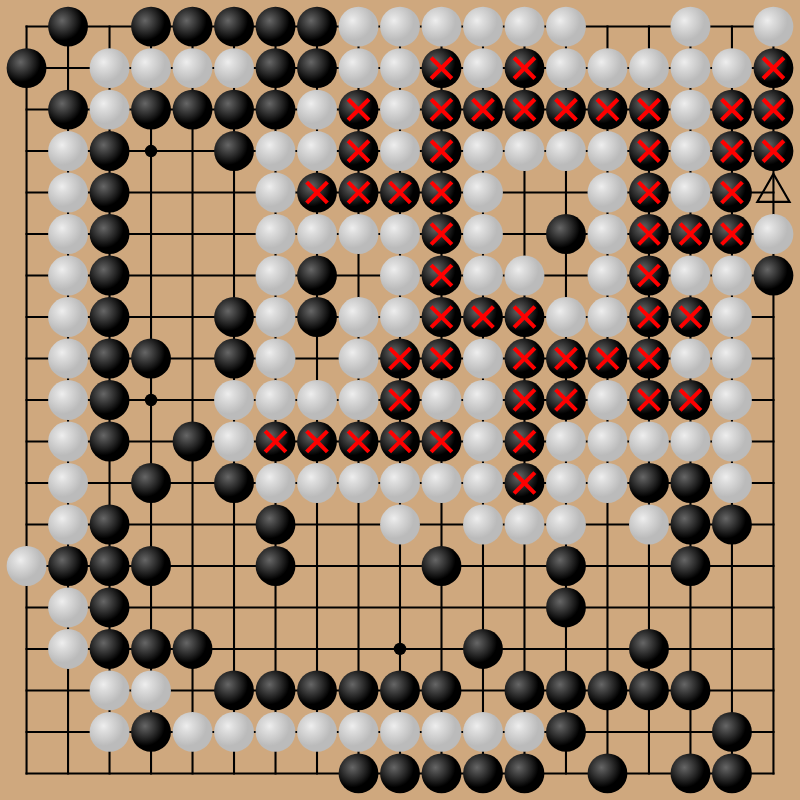

In the ancient Chinese game of Go, state-of-the-art artificial intelligence has generally been able to beat the best human players since at least 2016. But in the past few years, researchers have discovered flaws in these top-level AI Go algorithms that give humans a fighting chance. By using unconventional “cyclic” strategies, ones that even a novice human player could recognize and beat, a clever human can often exploit gaps in a top-level AI's strategy and put the algorithm at a disadvantage.

Researchers at MIT and FAR AI wanted to see if they could improve this “worst-case” performance in otherwise “superhuman” AI Go algorithms, testing three methods to harden the top-level KataGo algorithm's defenses against adversarial attacks. The results show that creating truly robust, unexploitable AI may be difficult, even in tightly controlled domains such as board games.

Three failed strategies

In the pre-print paper “Can Go AIs be adversarially robust?”, the researchers aim to create a Go AI that is truly “robust” against any and all attacks. This means an algorithm that “cannot be fooled into making game-losing mistakes that no human would make” but also one that any competing AI algorithm would need to expend significant computing resources to defeat. Ideally, a robust algorithm should also be able to overcome potential exploits by using additional computing resources when it encounters unfamiliar situations.

The researchers tried three methods to produce such a robust Go algorithm. In the first, they fine-tuned the KataGo model using more examples of the unconventional cyclic strategies that had previously defeated it, hoping that KataGo could learn to detect and defeat these patterns after seeing more of them.

This strategy initially looked promising, allowing KataGo to win 100 percent of games against the cyclic “attacker.” But after the attacker itself was fine-tuned (a process that used much less computing power than fine-tuning KataGo), KataGo's win rate fell back to 9 percent against a minor variation on the original attack.

For their second defense attempt, the researchers ran a multi-round “arms race,” in which new adversarial models discover new exploits and new defensive models attempt to close those newly discovered holes. After 10 rounds of such iterative training, the final defending algorithm still won only 19 percent of games against the final attacking algorithm, which had discovered a previously unseen variation on the exploit. This was true even though the updated algorithm maintained an edge against the earlier attackers it had been trained against.


In their final attempt, the researchers tried an entirely new type of training using vision transformers, in an attempt to avoid the “bad inductive biases” found in the convolutional neural networks originally used to train KataGo. This method also failed, winning only 22 percent of the time against a variation on the cyclic attack that “could be replicated by a human expert,” the researchers wrote.

Will anything work?

In all three defense attempts, the adversaries that defeated KataGo did not represent some new, previously unseen height in general Go-playing ability. Instead, these attacking algorithms were narrowly focused on discovering exploitable weaknesses in an otherwise performant AI algorithm, even though those simple attack strategies would lose to most human players.

Those exploitable holes highlight the importance of evaluating “worst-case” performance in AI systems, even though “average-case” performance may seem downright superhuman. On average, Katago can dominate even high-level human players using conventional strategies. But in the worst-case scenario, otherwise “weak” opponents can find holes in the system that tear it apart.
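
The gap between the two measures is easy to see with a little arithmetic. The Python sketch below is only an illustration: the opponent names and win rates are hypothetical placeholders, not figures from the paper, and `summarize_win_rates` is a helper invented for this example.

```python
from statistics import mean


def summarize_win_rates(win_rates):
    """Return the average-case win rate and the worst-case (opponent, win rate) pair."""
    if not win_rates:
        raise ValueError("need at least one opponent")
    average_case = mean(win_rates.values())
    # The worst case is the single opponent the system does worst against.
    worst_opponent = min(win_rates, key=win_rates.get)
    return average_case, (worst_opponent, win_rates[worst_opponent])


# Hypothetical win rates for illustration only; not taken from the research.
illustrative = {
    "conventional_opponent_a": 0.99,
    "conventional_opponent_b": 0.98,
    "cyclic_adversary": 0.10,
}

avg, (name, worst) = summarize_win_rates(illustrative)
print(f"average-case win rate: {avg:.2f}")
print(f"worst-case win rate:   {worst:.2f} (against {name})")
```

With numbers like these, the average stays respectable while the minimum exposes the one opponent the system cannot handle, which is roughly the pattern the researchers describe.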

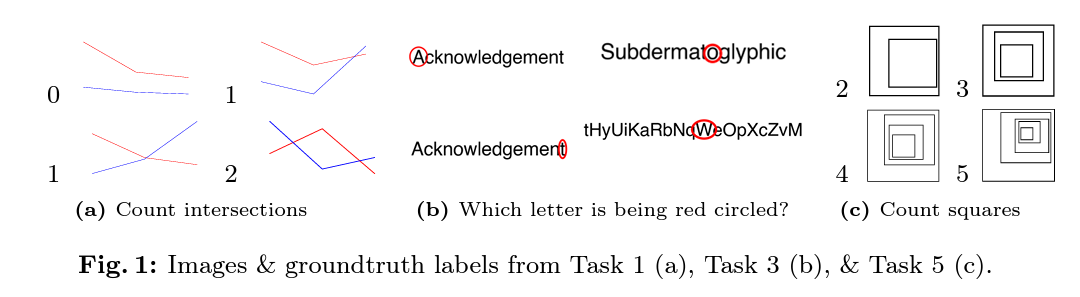

It is easy to extend this kind of thinking to other types of generative AI systems. LLMs that can succeed at some complex creative and reference tasks can still fail completely when faced with simple math problems (or even become “poisoned” by malicious prompts). Visual AI models that can describe and analyze complex images can still fail miserably when presented with basic geometric shapes.

Improving on such “worst-case” scenarios is crucial to avoiding embarrassing mistakes when introducing AI systems to the public. But this new research shows that determined “adversaries” can often discover new flaws in the performance of AI algorithms much more quickly and easily than the algorithms can evolve to fix those problems.

And if that is true in Go, a very complex game that nevertheless has tightly defined rules, it may be even more true in less controlled environments. “The main thing for AI is that these vulnerabilities will be difficult to eliminate,” Adam Gleave, CEO of FAR, told Nature. “If we cannot solve the issue in a simpler domain such as Go, then it seems unlikely that we will see jailbreak-like issues fixed in ChatGPT in the near future.”

Still, the researchers are not discouraged. Although none of their methods were able to “make [new] attacks impossible” in Go, their strategies were able to block previously identified, unchanging “fixed” exploits. This suggests that “it may be possible to fully defend a Go AI by training against a large enough corpus of attacks,” they wrote, and they made proposals for future research that could make this possible.

Regardless, this new research shows that making AI systems more robust against worst-case scenarios could be at least as valuable as pursuing new, more human/superhuman capabilities.
